Steps to Implement a Canary Deployment Model Effectively

The canary deployment model is a progressive release strategy that introduces a new software version to a small subset of users before a full rollout. This approach lets you test changes under real-world conditions while limiting risk. Because only a fraction of users see the new version, you can detect bugs early, gather feedback, and confirm stability before widening exposure. Compared with releasing to everyone at once, a canary deployment makes rollbacks quick, since you only need to route the small share of canary traffic back to the stable version. That reduces downtime, prevents widespread disruptions, and gives teams more confidence in each release.

Key Takeaways

Canary deployment lets you test updates with a small group first. This lowers risks and finds problems early.

Monitoring performance in real time is essential during canary tests. Use tools like Prometheus and Grafana to track metrics, and collect user feedback alongside them.

Feature flags make canary deployments more flexible. They let you turn individual features on or off without rolling back the entire release.

Gradually shift more users to the canary version as it proves stable. This keeps the transition smooth and limits the impact of any problem.

What Is a Canary Deployment Model?

Definition and Purpose

The canary deployment model is a progressive release strategy that allows you to introduce new software updates to a small group of users before rolling them out to everyone. This approach ensures that you can test changes in real-world conditions without risking the stability of your entire system. By gradually increasing the number of users exposed to the update, you can identify potential issues early and address them before a full-scale release. This method not only minimizes risks but also provides valuable insights into how the update performs under actual usage.

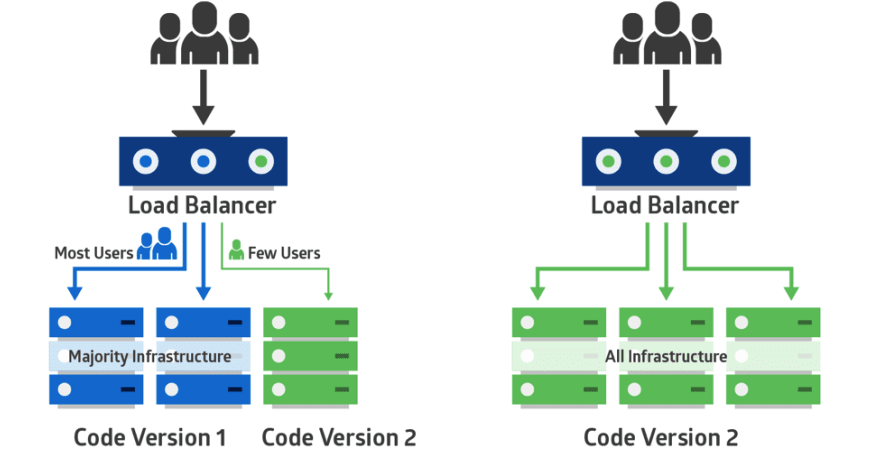

In practice, the canary deployment model functions by directing a small percentage of traffic to the new version while the majority of users continue using the stable version. This setup enables you to monitor performance, gather feedback, and make adjustments as needed. If the update proves successful, you can scale it up to the rest of your user base. If problems arise, you can roll back the changes with minimal disruption.

Key Characteristics of a Canary Deployment

Several features make the canary deployment model unique and effective:

It involves a phased or incremental rollout, where updates are introduced to a specific group of users first.

Real users test the new version, providing authentic feedback and uncovering issues that might not surface during internal testing.

Traffic routing plays a crucial role, as it allows you to control how much traffic is directed to the canary version.

The model supports quick rollbacks, ensuring that only a small subset of users experiences any issues.

These characteristics align well with modern DevOps practices, such as continuous integration and delivery (CI/CD). They also enhance the reliability of your applications by enabling controlled, incremental releases.

How It Differs from Other Deployment Strategies

The canary deployment model stands out from other strategies like blue-green deployment. While blue-green deployment involves switching between two separate environments, canary deployment uses a single environment and gradually shifts traffic to the new version. This makes it more resource-efficient and better suited for scenarios where you want to test updates with real users.

Unlike rolling deployments, which replace instances in batches until every server runs the new version, canary deployment controls what share of users reaches the new version and holds it there while you evaluate the results. This user-focused approach allows you to gather actionable feedback and monitor the impact of changes in real time. Additionally, the gradual rollout process reduces the risk of widespread issues, making it a safer choice for high-stakes updates.

By combining risk mitigation, real-time feedback, and scalability, the canary deployment model offers a balanced and effective way to manage software updates.

Benefits of a Canary Deployment Model

Risk Mitigation and Stability

The canary deployment model provides a reliable way to reduce risks during software updates. By releasing updates incrementally, you can ensure stability while minimizing the impact of potential issues. Exposing the new version to a small group of users allows you to detect bugs early and address them before they affect the broader audience. This approach protects your system and enhances user trust.

Some measurable benefits include:

Reduced impact on the user base

Improved user experience

Cost efficiency

When deploying a new feature or update that could disrupt users, this model ensures granular control over the rollout process. You can strategically plan releases and mitigate risks effectively.

Performance Monitoring and Feedback

Performance monitoring plays a critical role in the success of a canary deployment. Real-time observability helps you detect anomalies or issues in the canary version, enabling immediate corrective actions. Monitoring metrics like request latency, error rates, and resource utilization ensures the canary version performs as expected compared to the stable version. For example, tracking CPU and memory usage can help you identify bottlenecks and prevent performance degradation.
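The comparison between the canary and the stable version can be sketched as a small check. This is a minimal illustration, not a production analysis tool: the `VersionMetrics` type, the `evaluate_canary` function, and all three thresholds are assumptions chosen for the example, and real values depend on your service.

```python
from dataclasses import dataclass


@dataclass
class VersionMetrics:
    """Aggregated metrics for one version over the same time window."""
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        # Avoid division by zero when a version has received no traffic yet.
        return self.errors / self.requests if self.requests else 0.0


def evaluate_canary(
    stable: VersionMetrics,
    canary: VersionMetrics,
    max_error_rate_delta: float = 0.01,  # assumed: at most +1 percentage point
    max_latency_ratio: float = 1.2,      # assumed: at most 20% slower at p95
    min_requests: int = 100,             # assumed minimum sample size
) -> str:
    """Return "wait", "fail", or "pass" for the canary relative to stable."""
    if canary.requests < min_requests:
        return "wait"  # too little traffic to judge either way
    if canary.error_rate - stable.error_rate > max_error_rate_delta:
        return "fail"
    if canary.p95_latency_ms > stable.p95_latency_ms * max_latency_ratio:
        return "fail"
    return "pass"


stable = VersionMetrics(requests=10_000, errors=50, p95_latency_ms=200.0)
canary = VersionMetrics(requests=500, errors=3, p95_latency_ms=210.0)
verdict = evaluate_canary(stable, canary)  # "pass"
```

Comparing against the stable version over the same window, rather than against fixed absolute limits, helps separate problems caused by the new version from problems that affect the whole system.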

Additionally, user feedback gathered during the canary phase provides valuable insights. This feedback allows you to refine the update and ensure it meets user expectations. Combining performance monitoring with user feedback enhances overall system reliability and user satisfaction.

Scalability and Gradual Rollout

The canary deployment model excels in scalability. By gradually increasing the percentage of traffic directed to the canary version, you can assess its ability to handle higher loads. Metrics like request throughput help you evaluate whether the new version can scale effectively. This gradual rollout process ensures a smooth transition from the stable version to the updated one.

When deploying a new version that has not yet been proven under production load, this model allows you to test scalability without risking the entire system. It also provides a controlled environment to gather real-time feedback from users before a full release. This combination of scalability and control makes the canary deployment model an ideal choice for high-stakes updates.

Enhanced User Experience and Business Confidence

The canary deployment model significantly improves user experience by ensuring that updates are stable and reliable before reaching your entire audience. When you release a new feature or update, only a small group of users interacts with it initially. This approach allows you to identify and resolve issues early, ensuring that the broader user base continues to enjoy a seamless experience with the stable version. By minimizing disruptions, you maintain user trust and satisfaction.

You can also use this model to gather valuable feedback from real users during the early stages of the rollout. This feedback helps you refine the update and align it with user expectations. For example, if users report performance issues or usability concerns, you can address these problems before scaling the update to everyone. This iterative process ensures that the final version meets high-quality standards.

From a business perspective, canary deployment builds confidence in your development and operations teams. Gradual rollouts reduce the risk of widespread failures, which can harm your brand reputation. By monitoring key performance metrics and user behavior, you can make data-driven decisions about whether to proceed with the rollout or make adjustments. This proactive approach minimizes risks and enhances operational efficiency.

Feature flags further enhance this process by allowing you to control individual features within the deployment. If a specific feature causes issues, you can disable it without rolling back the entire update. This flexibility ensures that your business remains agile and responsive to user needs.

Incorporating canary deployment into your release strategy not only improves user satisfaction but also strengthens your business's ability to deliver reliable and innovative solutions.

Tools and Services for Canary Deployment

Cloud-Native Tools

Kubernetes and Istio

Kubernetes and Istio are widely used for implementing canary deployments. On its own, Kubernetes lets you run the stable and canary versions side by side behind one Service, where the traffic split roughly follows the ratio of stable to canary pods. Istio, a service mesh, adds fine-grained traffic management: you define routing rules that send a specific percentage of requests to each version, independent of pod counts, and you get telemetry for monitoring the canary during the rollout. Together, these tools create a robust foundation for managing canary deployments.

Canary Deployment in AWS (AWS CodeDeploy)

AWS CodeDeploy simplifies the process of canary deployment in AWS environments. It automates the gradual rollout of updates by directing a small percentage of traffic to the new version. You can monitor the performance of the canary version and adjust the rollout based on real-time data. AWS CodeDeploy also supports automatic rollbacks, ensuring minimal disruption if issues arise. This tool integrates seamlessly with other AWS services, making it a powerful choice for managing deployments in cloud-native applications.

CI/CD Platforms

Jenkins

Jenkins supports canary deployments by automating the deployment pipeline. It handles repetitive tasks, such as building, testing, and deploying code, reducing the risk of human error. Jenkins does not route traffic itself; instead, pipeline stages can call your load balancer, service mesh, or cloud provider tooling to split traffic and trigger rollbacks when checks fail. By using Jenkins this way, you can maintain a high deployment frequency while ensuring stability.

GitLab CI/CD

GitLab CI/CD offers robust support for canary deployments. It automates the deployment process and provides guardrails to prevent failed updates from reaching production. Features like progressive delivery and feature flags allow you to control the rollout and monitor performance. GitLab CI/CD ensures that your deployments are both frequent and reliable, making it an excellent choice for modern development teams.

Monitoring and Observability Tools

Prometheus and Grafana

Prometheus and Grafana are widely used for monitoring and observability in canary deployments. Prometheus collects performance metrics, such as request latency and error rates, while Grafana visualizes this data through customizable dashboards. These tools provide real-time observability, allowing you to detect anomalies and address issues immediately. Their alerting systems notify you of critical problems, ensuring a smooth deployment process.

Datadog

Datadog offers comprehensive monitoring for canary deployments. It tracks logs, metrics, and traces in real time, helping you identify performance bottlenecks and errors. Datadog's ability to monitor CPU and memory utilization ensures that your canary version can handle increased traffic. By using Datadog, you can gain detailed insights into the performance of your application and make data-driven decisions during the rollout.

Feature Flags and Traffic Management Tools

Feature Flags (e.g., LaunchDarkly)

Feature flags play a critical role in making your canary deployment strategy more flexible and reliable. These tools allow you to enable or disable specific features without redeploying your application. By treating features as independent units, you can isolate issues and roll back problematic features without affecting the rest of your deployment. This approach reduces downtime and simplifies troubleshooting.

For example, if a new feature in your canary deployment causes errors, you can deactivate it instantly using a feature flag. This prevents the need for a full rollback, saving time and resources. Tools like LaunchDarkly provide an intuitive interface for managing feature flags. They also integrate seamlessly with CI/CD pipelines, enabling you to automate feature toggles during deployments.
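The kill-switch behavior described above can be illustrated with a small in-memory flag store. This is only a sketch: the `FeatureFlags` class, the flag names, and the `checkout` function are hypothetical, and this is not the API of LaunchDarkly or any other flag service, which typically evaluate flags remotely and per user.

```python
class FeatureFlags:
    """A tiny in-memory flag store (a stand-in for a real flag service)."""

    def __init__(self, defaults: dict[str, bool]) -> None:
        self._flags = dict(defaults)

    def is_enabled(self, name: str) -> bool:
        # Unknown flags default to off so unfinished features stay hidden.
        return self._flags.get(name, False)

    def disable(self, name: str) -> None:
        self._flags[name] = False


# Hypothetical flags shipped together in one canary release.
flags = FeatureFlags({"new_checkout": True, "new_search": True})


def checkout(cart_total: float) -> str:
    """Serve the new flow only while its flag is on; otherwise fall back."""
    if flags.is_enabled("new_checkout"):
        return f"new checkout flow: {cart_total:.2f}"
    return f"legacy checkout flow: {cart_total:.2f}"


before = checkout(10)           # new flow while the flag is on
flags.disable("new_checkout")   # kill switch: no redeploy, no rollback
after = checkout(10)            # legacy flow; "new_search" is untouched
```

Both code paths ship in the same build, which is what makes the switch instant. The cost is that the old path must be kept working until the flag is retired.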

Feature flags are especially useful when deploying multiple features simultaneously. In many cases, a single deployment may include several updates. If one feature fails, you can disable it while keeping the others live. This ensures a stable user experience and minimizes disruptions. Combining feature flags with canary deployment in AWS environments enhances your ability to deliver high-quality updates quickly and efficiently.

Load Balancers (e.g., NGINX, HAProxy)

Load balancers are essential for managing traffic during a canary deployment. They distribute incoming requests between the stable and canary versions of your application. This ensures that only a small percentage of users interact with the new version initially. Tools like NGINX and HAProxy allow you to configure traffic routing rules with precision.

For instance, you can use a load balancer to direct 5% of traffic to the canary version while the remaining 95% continues to use the stable version. As the canary version proves its reliability, you can gradually increase the traffic percentage. This controlled rollout minimizes risks and ensures a smooth transition.
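To make the 5%/95% split concrete, here is a rough sketch of percentage-based routing in application code. It is not NGINX or HAProxy configuration; the `pick_version` function is a hypothetical helper that shows the idea those tools implement with weighted upstreams.

```python
import random


def pick_version(canary_percent: float, rng: random.Random) -> str:
    """Send roughly canary_percent of requests to the canary, the rest to stable."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # rng.random() is uniform in [0.0, 1.0), so the comparison below is
    # true for about canary_percent out of every 100 requests.
    return "canary" if rng.random() * 100 < canary_percent else "stable"


rng = random.Random(42)  # seeded only to make the demo repeatable
sample = [pick_version(5, rng) for _ in range(10_000)]
canary_share = sample.count("canary") / len(sample)  # close to 0.05
```

Random selection is per request, so the same user may see both versions across requests. If that matters for your application, route on a stable key such as a user ID instead.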

Load balancers also provide monitoring capabilities. They track metrics like request latency and error rates, helping you evaluate the performance of the canary version. By combining load balancers with tools like AWS CodeDeploy, you can automate traffic shifts and rollbacks. This makes managing canary deployment in AWS environments both efficient and scalable.

Tip: Use load balancers alongside feature flags to gain maximum control over your canary deployments. This combination allows you to manage traffic and features independently, ensuring a seamless user experience.

Step-by-Step Guide to Implementing a Canary Deployment Model

Step 1: Prepare the Deployment Environment

Before you initiate canary deployment, ensure your environment is ready for the process. Follow these steps to set up a robust foundation:

Establish metrics and monitoring systems to track application performance. Tools like Prometheus and Grafana can help you monitor performance in real time.

Implement CI/CD pipelines to automate deployments and enable reliable rollbacks. Jenkins or GitLab CI/CD are excellent options for this purpose.

Use a load balancer to manage traffic distribution between the stable and canary versions. This ensures flexible traffic allocation and granular control over the rollout.

Consider Kubernetes for managing deployments. It simplifies traffic routing and scaling, making it easier to handle complex environments.

Additionally, define deployment configurations as code, for example a skaffold.yaml file if you use Skaffold, to streamline the process. These configurations ensure consistency and reduce errors during deployment. By preparing your environment thoroughly, you set the stage for a smooth and controlled rollout.

Step 2: Configure Traffic Routing

Traffic routing is a critical step in the canary deployment model. You need to route traffic strategically to test the canary version while minimizing risks. Here are some best practices:

Use service meshes like Istio or Linkerd for advanced traffic management. These tools allow you to split traffic between the stable and canary versions with precision.

Leverage load balancers with weighted routing to control traffic distribution. For example, you can direct 5% of traffic to the canary version while keeping 95% on the stable version.

Implement DNS-based traffic routing for added flexibility. Assign separate DNS records to the canary and stable versions and use weighted records to adjust traffic flow. Keep TTLs short, because cached DNS responses delay both traffic shifts and rollbacks.

Combine feature flags with traffic routing to enable or disable specific features dynamically. This approach provides granular control over the deployment process.

Gradually shift traffic to the canary version as it proves stable. This incremental approach minimizes disruptions and ensures a seamless transition for users.

Step 3: Monitor Performance and Collect Feedback

Once the canary version is live, monitor performance closely and gather user feedback to evaluate its success. Focus on these key areas:

Set up robust monitoring and alerting systems. Tools like Datadog or Prometheus can track metrics such as request latency, error rates, and resource utilization.

Analyze logs and error reports to identify potential issues. Real-time observability helps you detect anomalies and take swift action.

Collect user feedback to understand how the canary version performs in real-world conditions. Gradual rollouts allow you to refine the update based on user insights.

For example, if users report usability concerns, you can address them before scaling up. By combining performance monitoring with feedback analysis, you ensure the canary deployment meets both technical and user expectations.
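Monitoring tools normally compute these metrics for you, but it helps to see what they measure. The sketch below derives an error rate and a latency percentile from raw request samples. The function names and sample data are illustrative assumptions, and the percentile uses the simple nearest-rank definition, which may differ slightly from what a given monitoring tool reports.

```python
import math


def error_rate(status_codes: list[int]) -> float:
    """Share of responses with a 5xx (server error) status code."""
    if not status_codes:
        return 0.0
    return sum(1 for code in status_codes if code >= 500) / len(status_codes)


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Made-up latencies (milliseconds) and status codes for canary requests.
latencies_ms = [120, 95, 110, 480, 105, 100, 130, 90, 115, 125]
statuses = [200, 200, 500, 503, 404]

p50 = percentile(latencies_ms, 50)   # 110
p95 = percentile(latencies_ms, 95)   # 480
rate = error_rate(statuses)          # 0.4
```

The single slow request barely moves the median but dominates the 95th percentile, which is why tail latency is usually tracked alongside the average during a canary.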

Tip: Always monitor scaling metrics to assess whether the canary version can handle increased traffic. This ensures a smooth transition to full deployment.

Step 4: Roll Back or Scale Up

Roll back specific features using feature flags if issues arise.

When issues arise during a canary deployment, you need a quick and efficient way to address them. Feature flags provide a powerful solution. These tools allow you to disable problematic features in the canary version without affecting the rest of the deployment. This approach ensures that the stable version remains unaffected while you resolve the issue.

For example, if a new feature causes errors or impacts performance, you can deactivate it instantly using a feature flag. This avoids the need for a full rollback, saving time and reducing complexity. By isolating the problematic feature, you maintain granular control over the deployment process. This method also minimizes disruptions for users and keeps your system stable.

Scale up to full deployment if the canary version is successful.

If the canary version performs well, you can proceed with scaling it up to the entire user base. Gradually increase traffic distribution to the new version while monitoring key metrics like error rates and resource utilization. Tools like load balancers or service meshes help you route traffic effectively during this phase.

A successful incremental rollout ensures that the transition from the stable version to the updated one is seamless. Continue collecting user feedback during this process to refine the deployment further. By scaling up carefully, you reduce risks and deliver a reliable update to all users.

Step 5: Automate and Optimize the Process

Use CI/CD pipelines for automation.

Automation is essential for streamlining your canary deployment. CI/CD pipelines handle repetitive tasks like building, testing, and deploying code. These pipelines also integrate with tools for traffic distribution and performance monitoring, ensuring a smooth rollout.

For instance, you can configure your CI/CD pipeline to automate traffic shifts between the stable and canary versions. This reduces manual effort and minimizes the risk of errors. Automation also enables faster deployments, allowing you to maintain a high release frequency without compromising quality.
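An automated traffic shift might look roughly like the loop below. This is a sketch under stated assumptions: `run_rollout`, the step percentages, and the two callables are hypothetical stand-ins for whatever your pipeline uses to update routing and query monitoring.

```python
from typing import Callable, Sequence


def run_rollout(
    set_canary_percent: Callable[[int], None],
    canary_is_healthy: Callable[[], bool],
    steps: Sequence[int] = (5, 25, 50, 100),  # assumed rollout stages
) -> bool:
    """Walk through the traffic steps; roll back to 0% on the first failed check.

    Returns True if the canary reached the final step, False if rolled back.
    """
    for percent in steps:
        set_canary_percent(percent)
        # A real pipeline would wait here (a "bake time") so that enough
        # traffic accumulates before the health check runs.
        if not canary_is_healthy():
            set_canary_percent(0)  # all traffic returns to the stable version
            return False
    return True


# Demo with fakes: record every traffic change, and fail the third check.
history: list[int] = []
checks = iter([True, True, False])
promoted = run_rollout(history.append, lambda: next(checks))
# history is [5, 25, 50, 0] and promoted is False
```

Passing the routing and health-check functions in as arguments keeps the rollout logic independent of any particular load balancer or monitoring system, and makes it easy to test with fakes as shown.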

Continuously refine the deployment process.

Optimization is an ongoing process. Regularly review your deployment strategy to identify areas for improvement. Analyze metrics from previous deployments to refine traffic distribution rules and enhance performance monitoring systems.

Incorporate user feedback into your optimization efforts. This ensures that your updates align with user expectations and deliver maximum value. By continuously improving your deployment process, you can achieve greater efficiency and reliability over time.

Tip: Combine automation with feature flags to gain maximum flexibility. This approach allows you to manage features and traffic independently, ensuring a smooth and controlled deployment.

Comparing Different Methods of Canary Deployment

Split Client vs. Weighted Mode

Definition and Use Cases for Split Client

The split client method assigns users to specific versions of your application based on predefined rules. These rules often rely on user attributes like geographic location or user ID. This method works well for scenarios requiring granular control, such as A/B testing or targeting specific user groups. For example, you can test a new feature with users in a particular region while others continue using the stable version. Split client deployments allow you to gather precise feedback from a controlled audience.
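One common way to implement rule-based assignment is to hash a stable user attribute into a bucket, so that the same user always lands on the same version. The sketch below combines a region rule with hash bucketing. The function names, the salt, the region name, and the percentages are assumptions made for illustration.

```python
import hashlib


def bucket_for(user_id: str, salt: str = "checkout-canary") -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def assign_version(
    user_id: str,
    region: str,
    canary_regions: frozenset[str] = frozenset({"eu-west"}),  # assumed rule
    canary_percent: int = 10,                                 # assumed share
) -> str:
    """Only users in a canary region are eligible, and of those only the
    ones whose bucket falls below canary_percent get the canary."""
    if region not in canary_regions:
        return "stable"
    return "canary" if bucket_for(user_id) < canary_percent else "stable"


users = [f"user-{i}" for i in range(10_000)]
in_region = [assign_version(u, "eu-west") for u in users]
canary_share = in_region.count("canary") / len(in_region)  # close to 0.10
```

Changing the salt reshuffles which users fall into the canary group, which is useful when you do not want the same users to be first in line for every release.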

Definition and Use Cases for Weighted Mode

The weighted mode method distributes traffic between the stable and canary versions using predefined percentages. This approach often uses weighted round-robin algorithms to ensure consistent traffic distribution. Weighted mode is ideal for scenarios requiring scalability and simplicity. For instance, you can direct 10% of traffic to the canary version and gradually increase it as confidence grows. This method suits large-scale deployments where even traffic distribution is critical.
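As an illustration of weighted round-robin, the sketch below uses the "smooth" variant, which interleaves picks instead of sending long runs to one version. The function name and the 9:1 weights are assumptions for the example; a real load balancer applies the same idea internally.

```python
def smooth_weighted_round_robin(weights: dict[str, int], count: int) -> list[str]:
    """Return `count` picks, distributed in proportion to the weights."""
    if not weights or any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be non-empty and positive")
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(count):
        # Every version gains its weight each round...
        for name, weight in weights.items():
            current[name] += weight
        # ...the version with the highest running score is chosen...
        chosen = max(current, key=current.get)
        # ...and pays back the total, so it waits for its next turn.
        current[chosen] -= total
        picks.append(chosen)
    return picks


# 9:1 weights give the canary exactly one request in every ten.
picks = smooth_weighted_round_robin({"stable": 9, "canary": 1}, 10)
canary_count = picks.count("canary")  # 1
```

Unlike random selection, this produces exact ratios over each full cycle, which matches the "consistent traffic ratios" advantage listed for weighted mode.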

| Feature | Split Client | Weighted Mode |
|---|---|---|
| Pros | 1. Granular Control | 1. Simple Configuration |
| | 2. Flexible Traffic Allocation | 2. Consistent Traffic Ratios |
| | 3. Good for A/B Testing | 3. Scalable |
| Cons | 1. Complex Configuration | 1. Less Granular Control |
| | 2. Not Perfectly Even | 2. Static Allocation |
| | 3. Limited Scope | 3. Not Ideal for A/B Testing |

Pros and Cons of Each Method

Advantages and Limitations of Split Client

The split client method offers granular control and flexibility. You can allocate traffic based on specific user attributes, making it perfect for A/B testing. However, its configuration can be complex, and traffic distribution may not always be perfectly even. Additionally, its scope is limited, as it targets specific user groups rather than the entire audience.

| Advantages | Limitations |
|---|---|
| Granular Control | Complex Configuration |
| Flexible Traffic Allocation | Not Perfectly Even |
| Good for A/B Testing | Limited Scope |

Advantages and Limitations of Weighted Mode

The weighted mode method simplifies traffic distribution with consistent ratios. It scales well for large deployments and uses weighted round-robin algorithms to manage traffic effectively. However, it lacks the granular control of split client methods and uses static allocation, which may not suit dynamic testing scenarios. It is also less effective for A/B testing.

| Advantages | Limitations |
|---|---|
| Simple Configuration | Less Granular Control |
| Consistent Traffic Ratios | Static Allocation |
| Scalable | Not Ideal for A/B Testing |

Tip: Choose the method that aligns with your deployment goals. Use split client for targeted testing and weighted mode for scalable rollouts.

Implementing a canary deployment model effectively involves several critical steps. You need to automate the deployment process to reduce human error and ensure consistency. Gradually shifting traffic to the canary version minimizes risks while robust monitoring systems track performance and user feedback. Testing in production-like environments ensures accurate assessments, and feature flags provide fine-grained control over individual features.

Feature flags, monitoring, and gradual rollouts are essential for success. Feature toggles allow you to enable or disable features instantly, ensuring quick rollbacks when needed. Continuous monitoring detects anomalies early, while gradual rollouts reduce the impact of potential issues by starting with a small user group.

Automation and tools play a pivotal role in streamlining deployments. CI/CD pipelines, monitoring platforms, and traffic management tools ensure efficiency and reliability. By combining these strategies, you can deliver high-quality updates with confidence and maintain a seamless user experience.

FAQ

What is the main purpose of a canary deployment model?

The canary deployment model helps you release updates gradually. It allows you to test changes with a small group of users first. This approach minimizes risks, ensures stability, and provides real-world feedback before a full rollout.

How do feature flags enhance canary deployments?

Feature flags let you enable or disable specific features without redeploying. If a feature causes issues, you can deactivate it instantly. This flexibility reduces downtime, simplifies troubleshooting, and ensures a stable user experience during deployments.

What tools are essential for monitoring a canary deployment?

You should use tools like Prometheus and Grafana for real-time performance monitoring. These tools track metrics such as error rates and latency. They also provide alerts for anomalies, helping you address issues quickly.

How do you decide when to scale up a canary deployment?

Monitor key metrics like error rates, resource usage, and user feedback. If the canary version performs well and meets expectations, gradually increase traffic. This ensures a smooth transition to full deployment.

Can you roll back a canary deployment without affecting users?

Yes, you can roll back using feature flags or traffic routing tools. Feature flags allow you to disable problematic features instantly. Traffic routing tools let you redirect users back to the stable version, minimizing disruptions.
