Introduction

FeatBit, as an open-source feature flag platform, needs to track feature flag usage data and custom event data to monitor the performance of its SDKs. Handling hundreds or even thousands of events per second is common. For various reasons, this data must be inserted and stored in PostgreSQL rather than OLAP databases like ClickHouse, making it essential to choose an efficient processing and storage solution.

Methods for Bulk Inserting Data into PostgreSQL in .NET

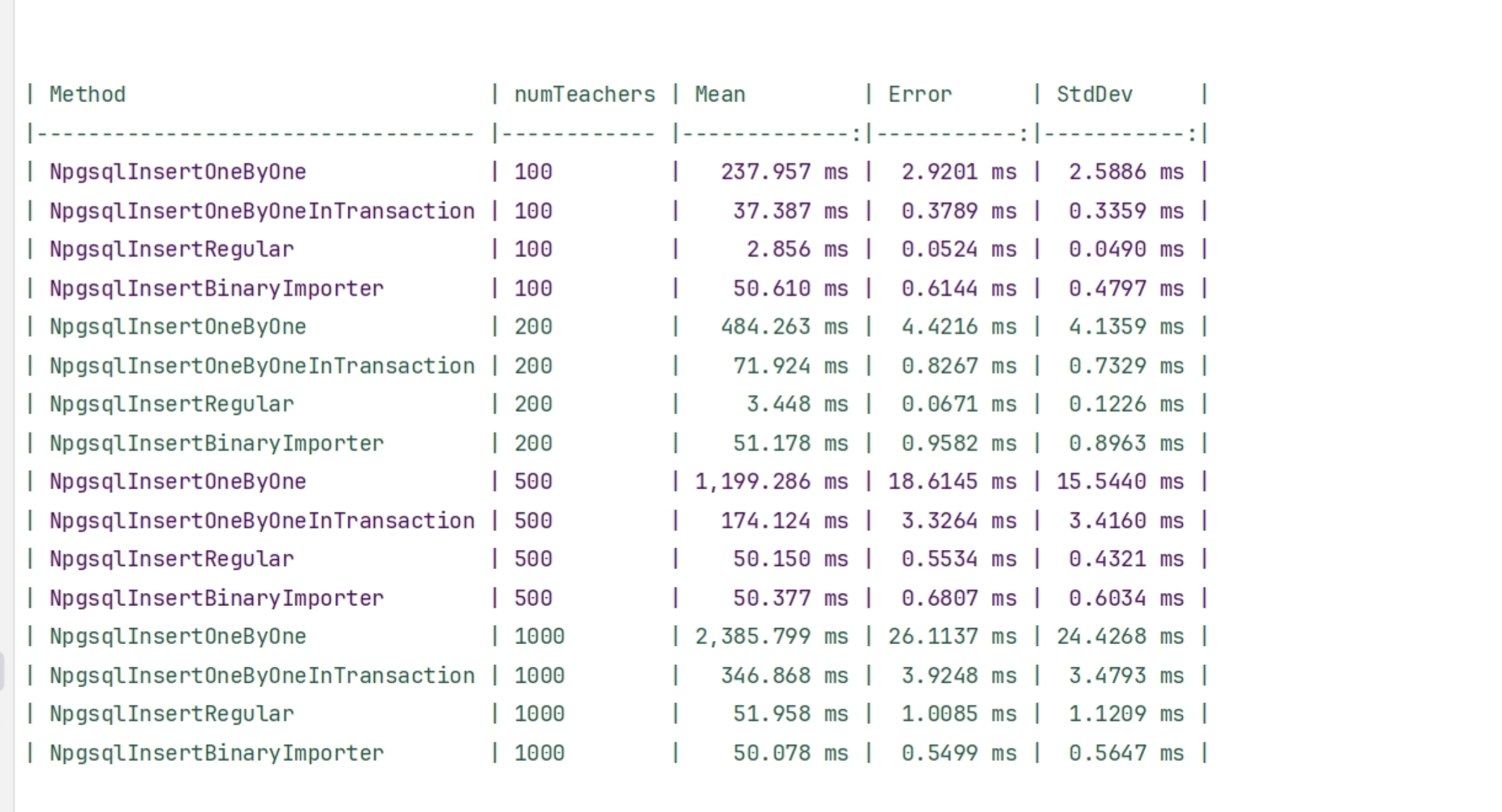

Many articles, such as Benchmarks of PostgreSQL .NET, highlight that bulk inserts are a highly effective way to insert large volumes of data into a PostgreSQL database, significantly outperforming row-by-row inserts.

In .NET applications, there are three common methods for bulk inserting data into PostgreSQL:

- Dapper Plus - A powerful solution but requires a paid license, making it less suitable for our use case.

- Entity Framework Bulk Extensions - Conditionally free, meaning that in some cases, a paid license may be required, which also makes it less attractive for a simple feature like bulk inserts.

- NpgSql's BinaryImport - A built-in approach using PostgreSQL's native COPY command, which avoids additional dependencies.

We Chose NpgSql's BinaryImport for Our Project

After evaluating these options, we opted for Npgsql's BinaryImport as our bulk insert solution. The main reason for choosing BinaryImport is to avoid external dependencies and licensing costs for such a straightforward functionality.

To validate this choice, we conducted a simple performance test comparing different bulk insert methods available in Npgsql.

When to Use Different Methods

If the number of items in a bulk insert is relatively low (e.g., fewer than 1,000), you can use the InsertRegular method to insert data.

public void BulkInsertRegular(IEnumerable<Teacher> teachers)

{

// using raw SQL here as opposed to a parameterized approach because it's faster

cmd.CommandText = "INSERT INTO teachers (first_name, last_name, subject, salary) VALUES " +

(String.Join(',',teachers.Select(t => $"('{t.FirstName}','{t.LastName}','{t.Subject}',{t.Salary})")));

cmd.ExecuteNonQuery();

}

Code Source from michaelscodingspot.com

However, as the number of records increases, the performance of standard INSERT degrades, making BinaryImport the preferred approach for handling larger volumes efficiently.

Using BinaryImport for High-Volume Inserts

If the number of items is high (e.g., more than 1,000), the InsertBinary method is a better choice. As the number of items increases, the performance of InsertBinary remains more stable, whereas the performance of InsertRegular tends to degrade.

await dbContext.Database.OpenConnectionAsync();

try

{

var conn = dbContext.Database.GetDbConnection() as NpgsqlConnection;

await using var writer = await conn!.BeginBinaryImportAsync(

"COPY events (id, distinct_id, env_id, event, properties, timestamp) FROM STDIN (FORMAT BINARY)"

);

foreach (var insight in insights)

{

var values = (object[])insight;

await writer.StartRowAsync();

await writer.WriteAsync(values[0], NpgsqlDbType.Uuid);

await writer.WriteAsync(values[1], NpgsqlDbType.Varchar);

await writer.WriteAsync(values[2], NpgsqlDbType.Varchar);

await writer.WriteAsync(values[3], NpgsqlDbType.Varchar);

await writer.WriteAsync(values[4], NpgsqlDbType.Jsonb);

await writer.WriteAsync(values[5], NpgsqlDbType.Timestamp);

}

await writer.CompleteAsync();

}

finally

{

await dbContext.Database.CloseConnectionAsync();

}

Code Source from FeatBit's Github Repository